We're growing accustomed to thinking about the greenhouse gas impact of transportation and energy production, but nearly everything we do leaves a carbon footprint. If it requires energy to make or do, chances are, some carbon was emitted along the way. But these are the early days of the climate awareness era, and it's not yet habit to consider the greenhouse implications of otherwise prosaic actions.

So as an exercise, let's examine the carbon footprint of something commonplace -- a cheeseburger. There's a good chance you've eaten one this week, perhaps even today. What was its greenhouse gas impact? Do you have any idea? This is the kind of question we'll be forced to ask more often as we pay greater attention to our individual greenhouse gas emissions.

Burgers are common food items for most people in the US -- surprisingly common. Estimates for the average American diet range from about one burger per week, or about 50 a year (Fast Food Nation), to as many as three per week, or roughly 150 a year (the Economist, among other sources). So what's the global warming impact of all those cheeseburgers? I don't just mean cooking the burger; I mean the full gamut of energy costs associated with a cheeseburger, including growing the feed for the cattle that supply the beef and cheese, growing the produce, storing and transporting the components, and cooking.

The first step in answering this question requires figuring out the life cycle energy of a cheeseburger, and it turns out we're in luck. Energy Use in the Food Sector (PDF), a 2000 report from Stockholm University and the Swiss Federal Institute of Technology, does just that. This highly-detailed report covers the myriad elements going into the production of the components of a burger, from growing and milling the wheat to make bread, to feeding, slaughtering and freezing the cattle for meat -- even the energy costs of pickling cucumbers. The report is fascinating in its own right, but it also gives us exactly what we need to make a relatively decent estimation of the carbon footprint of a burger.

Overall, the researchers conclude that the total energy use going into a single cheeseburger amounts to somewhere between about 7 and 20 megajoules (the range comes from the variety of methods available to the food industry).

The researchers break this down by process, but not by energy type. Here, then, is a first approximation: we can split the food production and transportation uses into a diesel category, and the food processing (milling, cooking, storage) uses into an electricity category. Split this way, the totals add up thusly:

Diesel -- 4.7 to 10.8 MJ per burger

Electricity -- 2.6 to 8.4 MJ per burger
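As a sanity check, the two category ranges should add back up to the report's overall 7 to 20 MJ. A minimal Python sketch; the constant and function names are illustrative, not taken from the report:

```python
# Per-burger energy ranges in MJ, as (low, high), split into two categories.
DIESEL_MJ = (4.7, 10.8)       # food production and transportation
ELECTRICITY_MJ = (2.6, 8.4)   # milling, cooking, storage

def total_energy_mj(diesel, electricity):
    """Add the low ends and the high ends of two (low, high) ranges."""
    low = diesel[0] + electricity[0]
    high = diesel[1] + electricity[1]
    # Round to one decimal to hide floating-point noise.
    return round(low, 1), round(high, 1)

print(total_energy_mj(DIESEL_MJ, ELECTRICITY_MJ))  # (7.3, 19.2)
```

Both ends fall inside the report's 7 to 20 MJ span.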

With these ranges in hand, we can then convert the energy use into carbon dioxide emissions, based on fuel.

Diesel is straightforward. For electricity, we should calculate the footprint using both natural gas and coal, as their carbon emissions vary considerably. (If you're lucky enough to have your local cattle ranches, farms and burger joints powered by a wind farm, you can drop that part of the footprint entirely.) The results:

Diesel -- 350 to 800 grams of carbon dioxide per burger

Gas -- 416 to 1340 grams of carbon dioxide per burger

Coal -- 676 to 2200 grams of carbon dioxide per burger

...for a combined cheeseburger footprint of 766 grams of CO2 (at the low end, with gas) to 3,000 grams of CO2 (at the high end, with coal). Adding in the carbon from operating the restaurant (and driving to the burger shop in the first place), we can reasonably call it somewhere between 1 and 3.5 kilograms of energy-based carbon dioxide emissions per cheeseburger.
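The energy-to-CO2 conversion can be sketched in Python. The emission factors are an assumption: the text doesn't state them, so the values here (about 74 g CO2/MJ for diesel, 160 g/MJ for gas-fired electricity, 260 g/MJ for coal-fired electricity) are back-calculated from its own results, e.g. 416 g ÷ 2.6 MJ = 160 g/MJ. Treat this as an illustrative sketch, not a reference calculation.

```python
# ASSUMED emission factors, grams of CO2 per MJ of delivered energy.
# Back-calculated from the article's results; not stated in the source.
FACTOR_G_PER_MJ = {"diesel": 74, "gas": 160, "coal": 260}

DIESEL_MJ = (4.7, 10.8)
ELECTRICITY_MJ = (2.6, 8.4)

def co2_grams(energy_mj, fuel):
    """Convert a (low, high) energy range in MJ to grams of CO2."""
    factor = FACTOR_G_PER_MJ[fuel]
    return energy_mj[0] * factor, energy_mj[1] * factor

diesel = co2_grams(DIESEL_MJ, "diesel")    # about (348, 799)
gas = co2_grams(ELECTRICITY_MJ, "gas")     # about (416, 1344)
coal = co2_grams(ELECTRICITY_MJ, "coal")   # about (676, 2184)

# Best case pairs diesel with gas-fired power; worst case with coal-fired.
low_total = diesel[0] + gas[0]
high_total = diesel[1] + coal[1]
print(round(low_total), round(high_total))  # 764 2983
```

With these rounded factors the sums land just under the text's 766 g and 3,000 g, which themselves are rounded figures.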

But that's not the whole story. There's a little thing called methane. Pound for pound, it is about 23 times more effective a greenhouse gas than carbon dioxide, and it's something that cattle produce in abundance.

By regulation, a beef cow must be at least 21 months old before going to the slaughterhouse; let's call it two years. A single cow produces about 110 kilos of methane per year in manure and what the EPA delicately calls "enteric fermentation," so over its likely lifetime, a beef cow produces 220 kilos of methane. Since a single kilo of methane is the equivalent of 23 kilos of carbon dioxide, a single beef cow produces a bit more than 5,000 CO2-equivalent kilograms of methane over its life.
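The methane arithmetic in this paragraph, as a small Python sketch using only the text's own figures (110 kg per year, two years, a multiplier of 23); the names are illustrative:

```python
METHANE_KG_PER_YEAR = 110   # manure plus enteric fermentation, per cow
LIFETIME_YEARS = 2          # ~21 months to slaughter, rounded up
GWP_METHANE = 23            # CO2-equivalent multiplier used in the text

def lifetime_methane_co2e_kg(per_year, years, gwp):
    """CO2-equivalent kilograms of methane emitted over a cow's life."""
    return per_year * years * gwp

print(lifetime_methane_co2e_kg(METHANE_KG_PER_YEAR, LIFETIME_YEARS, GWP_METHANE))  # 5060
```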

A typical beef cow produces approximately 500 lbs of meat for boneless steaks and ground beef. If we assume that the typical burger is a quarter-pound of pre-cooked meat, that's 2,000 burgers per cow. Dividing the methane total by the number of burgers, then, we get about 2.6 CO2-equivalent kilograms of additional greenhouse gas emissions from methane per burger. That's roughly as much greenhouse gas from cow burps (and so on) as from all of the energy used to raise, feed, and produce all of the components of a finished cheeseburger!
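Spreading the lifetime methane figure across a cow's burgers, again as a sketch using only the numbers given in the text. The exact quotient is about 2.53 kg; the text rounds this to about 2.6 kg and carries that value into the totals that follow.

```python
LIFETIME_CO2E_KG = 5060   # 110 kg/yr * 2 yr * 23, per the text
MEAT_LB_PER_COW = 500     # boneless steaks and ground beef
PATTY_LB = 0.25           # quarter-pound, pre-cooked

burgers_per_cow = MEAT_LB_PER_COW / PATTY_LB           # 2000.0
methane_per_burger = LIFETIME_CO2E_KG / burgers_per_cow
print(burgers_per_cow, round(methane_per_burger, 2))   # 2000.0 2.53
```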

That's a total of 3.6-6.1 kg of CO2-equivalent per burger. If we accept the ~3/week number, that's 540-915 kg of greenhouse gas per year for an average American's burger consumption. And for the nation as a whole?
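Adding the methane share to each end of the energy-based range, then scaling by 150 burgers a year, reproduces both ranges in this paragraph. A minimal sketch with illustrative names:

```python
ENERGY_CO2_KG = (1.0, 3.5)   # energy-based range per burger, from the text
METHANE_CO2E_KG = 2.6        # methane share per burger, as rounded in the text
BURGERS_PER_YEAR = 150       # the ~3/week estimate

# Per-burger total: energy-based CO2 plus the methane share.
per_burger = tuple(round(e + METHANE_CO2E_KG, 1) for e in ENERGY_CO2_KG)
# Per-person annual total at 150 burgers a year.
per_person = tuple(round(b * BURGERS_PER_YEAR) for b in per_burger)
print(per_burger, per_person)  # (3.6, 6.1) (540, 915)
```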

300,000,000 citizens × 150 burgers/year × 4.35 kilograms of CO2-equivalent per burger ÷ 1,000 kilograms per metric ton = 195,750,000 metric tons of CO2-equivalent per year for all US burgers

And that uses 4.35 kg per burger, below the midpoint of the 3.6 to 6.1 kg range.

Even at the lower estimate of one cheeseburger per week for an average American, the numbers remain sobering.

300,000,000 citizens × 50 burgers/year (~Fast Food Nation) × 4.35 kilograms of CO2-equivalent per burger ÷ 1,000 kilograms per metric ton = 65,250,000 metric tons of CO2-equivalent per year for all US burgers
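Both national estimates follow the same formula, differing only in burgers per year. A sketch, assuming the text's 4.35 kg per burger (below the 4.85 kg midpoint of the 3.6 to 6.1 kg range); the function name is hypothetical:

```python
POPULATION = 300_000_000
KG_CO2E_PER_BURGER = 4.35   # deliberately below the range midpoint
KG_PER_METRIC_TON = 1000

def national_tonnes(burgers_per_year):
    """Annual metric tons of CO2-equivalent for all US burgers."""
    return POPULATION * burgers_per_year * KG_CO2E_PER_BURGER / KG_PER_METRIC_TON

for rate in (150, 50):
    print(rate, f"{national_tonnes(rate):,.0f}")
# 150 195,750,000
# 50 65,250,000
```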

Those numbers are big, impressive, and probably meaningless.

So let's convert them into something more visceral by comparing them with the emissions of a more familiar item: an SUV.

A Hummer H3 SUV emits 11.1 US (short) tons of CO2 over a year; this converts to about 10.1 metric tons, so we'll call it 10 to make the math easy.
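A quick check of the ton conversion. The 10.1 figure matches US short tons (2,000 lb, exactly 907.18474 kg); imperial long tons (2,240 lb, exactly 1016.0469088 kg) would give about 11.3 metric tons instead. A sketch:

```python
SHORT_TON_KG = 907.18474    # US short ton, 2,000 lb
LONG_TON_KG = 1016.0469088  # imperial long ton, 2,240 lb

def to_metric_tons(tons, kg_per_ton):
    """Convert a quantity in some kind of ton to metric tons (1,000 kg)."""
    return tons * kg_per_ton / 1000

print(round(to_metric_tons(11.1, SHORT_TON_KG), 1))  # 10.1
print(round(to_metric_tons(11.1, LONG_TON_KG), 1))   # 11.3
```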

195,750,000 metric tons of CO2-equivalent per year for all US burgers ÷ 10 metric tons of CO2 per SUV ≈ 19.6 million SUVs

or

65,250,000 metric tons of CO2-equivalent per year for all US burgers ÷ 10 metric tons of CO2 per SUV ≈ 6.5 million SUVs
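The SUV comparison is a single division per scenario. Sketched in Python, with the 10-metric-ton-per-SUV rounding taken from the text and a hypothetical helper name:

```python
TONNES_PER_SUV = 10  # Hummer H3 annual CO2, rounded from ~10.1 metric tons

def suv_equivalents_millions(annual_tonnes):
    """Millions of SUVs whose combined yearly CO2 equals annual_tonnes."""
    return annual_tonnes / TONNES_PER_SUV / 1e6

scenarios = {"3 burgers/week": 195_750_000, "1 burger/week": 65_250_000}
for label, tonnes in scenarios.items():
    print(f"{label}: {suv_equivalents_millions(tonnes):.1f} million SUVs")
# 3 burgers/week: 19.6 million SUVs
# 1 burger/week: 6.5 million SUVs
```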

To make it clear, then: the greenhouse gas emissions arising every year from the production and consumption of cheeseburgers are roughly equal to those of 6.5 million to 19.6 million SUVs. There are approximately 16 million SUVs on the road in the US today.

Will this information alone make a difference? Probably not; after all, nutrition information panels on packaged foods didn't turn us all into health food consumers. But information like this does allow us to make more informed choices, and it removes the excuse of not knowing the consequences of our actions.

This was, ultimately, an attempt to take a remarkably prosaic activity and parse out its carbon aspects. After all, we're all increasingly accustomed to recognizing obvious, direct carbon emissions, but we're still wrapping our heads around the secondary and tertiary sources. Exercises like this one help to reveal the less-obvious ways that our behaviors and choices impact the planet and our civilization.

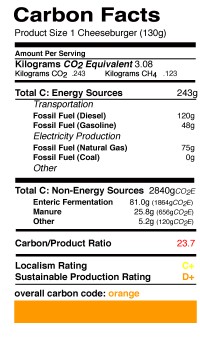

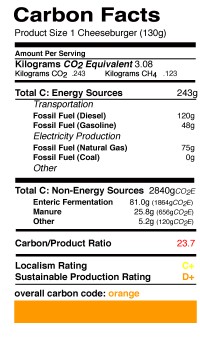

I doubt that we'll have to go through this process with everything we eat, from now until the end of the world. As our societies become more conscious of the impact of greenhouse gases, and of the need for very tight and careful controls on just how much carbon we dump into the air, we'll need to create mechanisms for carbon transparency. Be they labels, icons, color codes, or RFID tags, we'll need to be able to see, at a glance, just how much of a hit our personal carbon budgets take with each purchase.

The Cheeseburger Footprint is about much more than raw numbers. It's about how we live our lives, and the recognition that every action we take, even the most prosaic, can have unexpectedly profound consequences. The article was meant to poke us in our collective ribs, waking us up to the effects of our choices.
