Nuclear power has a key part to play in providing the UK with reliable, low carbon electricity in the future, argues Peter Bleasdale. In this week's Green Room, he says a new National Nuclear Laboratory will provide the research and training needed after decades of neglect and decline.

The UK government confirmed in January that it was in the country's long-term interest that nuclear power should play a role in providing Britain with clean, secure and affordable energy.

So why is nuclear power back on the national agenda? There is no perfect answer and no perfect energy source; each method of generating electricity has advantages and disadvantages.

Like every other country, the UK is faced with the challenge of developing an energy programme that balances environmental issues, such as carbon emission reduction, with energy demand, security of supply and economics.

Secure supplies are especially important, as even short-lived power cuts can cause massive disruption. Nuclear is a traditional base-load supplier with high global reliability.

In the past, nuclear has been seen as a high-cost option when compared with other methods of electricity generation.

But a range of independent studies now shows that full nuclear life-cycle costs are competitive with other sources. This competitiveness improves further when factors such as the desirability of meeting policy objectives for cleaner, more secure power sources are taken into account.

Back to school

Bearing all of this in mind, UK ministers now believe it to be in the public interest to allow energy companies the option of investing in new nuclear power stations.

[Image caption: The UK has not built a nuclear power station for more than a decade]

These stations are more efficient than those they will replace and they must have a key role to play as part of the UK's energy mix.

The White Paper, Meeting the Energy Challenge, also restated the intention of establishing a National Nuclear Laboratory (NNL).

It will have the aim of providing the technologies and expertise to ensure the industry operates safely and cost effectively.

Ministers believe that the energy sector faces challenges in meeting the need for skilled workers in research and development, design, construction and operation of new nuclear power.

The NNL will be aligned with national policy on skills and will support and work alongside the newly created National Skills Academy for Nuclear (NSAN). The NNL's role will be vital in building nuclear scientific skills.

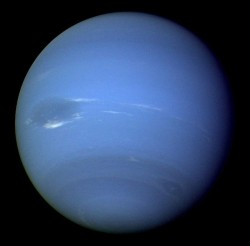

In nuclear research and development, personnel numbers declined dramatically from about 9,000 in 1980 to just 1,000 a few years ago.

Nexia Solutions, around which the NNL will be based, has already taken action to address the skills decline by working closely with the academic sector.

The NNL will safeguard and develop key scientific and technical skills and facilities that cannot be reliably supplied by the external marketplace.

[Image caption: The "RadBall" is one innovation to improve nuclear plants' operations]

It will build a technology skills pipeline back into industry, and will also have national and international influence in marketing and selling skills and technologies overseas.

One example of the NNL's innovative work already playing its part is the "RadBall", developed by Dr Steven Stanley, a research technologist.

In essence, it collects information about the location, quantity and type of radiation in inaccessible areas, removing the need for people to enter them.

The information gathered through a polymer-based "crystal ball" device is then turned into meaningful data by using new software.

Waste worries

While nuclear power is a tried-and-tested carbon free technology, and new stations are better designed and more efficient than those being replaced, it's true that many countries, including most of Western Europe, decided to suspend new development in the recent past.

Concerns about the economics of new stations, the possibility of accidents, slow progress in dealing with nuclear waste and the historical link between nuclear energy and weapons resulted in a loss of confidence among politicians and the public.

With the UK challenged to balance its commitment to tackling climate change against meeting rising energy demand and keeping the lights on, nuclear-generated electricity has returned to the energy agenda alongside other low carbon technologies.

But, before new nuclear stations are given the go ahead, the government will have to be satisfied that effective arrangements are in place to manage and dispose of the waste they produce.

All wastes can have a negative effect if released into the surrounding environment, but the amount of waste produced by nuclear power is very small by industrial standards, and modern nuclear power stations are much more efficient than earlier examples.

Proportionally, the volume of radioactive waste needing to be managed will not increase very much, whether or not there is a future programme of nuclear power stations.

The quantity of nuclear waste is extremely small when compared to overall national volumes of all toxic wastes.

Given the diversity of the nuclear programme, radioactive waste varies in the amount of radiation it gives out, so it is categorised as low-level, intermediate-level or high-level.

A nuclear power station produces around 100 cubic metres of solid radioactive waste each year (about the volume of a lorry) and more than 90% of this is low-level.

Already there are significant volumes of historic wastes safely stored, and a programme of new reactors in the UK will only raise waste volumes by up to 10%.

Unlike fossil fuel waste, which is released into the atmosphere and ground, nuclear waste is carefully managed and contained.

In its role to provide the experts and technologies that support the nuclear industry in operating safely and cost effectively in the short and longer term, the NNL will deliver technological solutions that help deal with waste issues now and plans for the processing and management of waste in the future.

The NNL is closely involved in waste research and will provide close support for future waste management plans and strategies.

Disposal in a geological facility is seen as the most viable long-term solution and the right approach for managing both waste from new nuclear power stations and legacy waste.

Safety first

The nuclear industry spends millions of pounds each year on safety to meet its own extremely stringent requirements and also those of several external regulating and advisory bodies.

The safety record of nuclear power reactors in the UK and in most of the world is excellent.

In the UK, before a nuclear power station is licensed, its owners must demonstrate that it is safe and prove that the likelihood of uncontrolled radioactivity escaping is, literally, less than one in a million for every year of the reactor's life.

Designers must also assume that human operators can make mistakes, so in traditional nuclear designs all protective systems must be duplicated or trebled.

More recently, the focus has moved towards "passive safety". Instead of relying on valves, pumps and other engineered features, designers are increasingly using the forces of nature - gravity or the fact that materials expand when they get hotter - to ensure safety.

Such an approach reduces the cost of building the reactor and increases the reliability and predictability of how the plant behaves under both normal and abnormal circumstances.

The NNL will also play a key role in supporting the licensing process for new nuclear reactors and other regulatory requirements in the UK.

It will also play an ongoing and crucial technology role in all aspects of nuclear new build and operation.

In supporting the resurgence of the nuclear industry in the UK, the function of the NNL is very clear - to provide the skills and technologies to support all aspects of a successful nuclear industry now and into the future.

Dr Peter Bleasdale is managing director of Nexia Solutions, a wholly owned subsidiary of BNFL Group that will be developed into the National Nuclear Laboratory.

The Green Room is a series of opinion pieces on environmental topics running weekly on the BBC News website

Do you agree with Peter Bleasdale? Does nuclear power play a vital role in providing reliable, low carbon electricity? Will a National Nuclear Laboratory plug the gap in the skills and research deficit? Or has the sector been proven to be an expensive white elephant?

We agree with the need to focus on skills development for the nuclear industry. We believe the most important of these skills is effective safety management. Safety management includes safety-related programs and processes, and the development and maintenance of a strong safety culture. Problems at existing nuclear plants are often accompanied by a safety culture that has gone awry. NNL and NSAN safety management training must recognize that safety and production goals will sometimes be in conflict, and that safety culture will deteriorate over time unless it is actively managed.

Lewis Conner, Powershift LLC, Kensington, California

I laugh when I hear arguments from green parties about the dreadful legacies of nuclear waste. Do they imagine that future technologies will be unable to cope? If all else fails they may bury it under the huge mountains of waste from other processes! The real danger to our heirs will arise from wars fought over diminishing supplies of food, energy, clean air and water. Let us keep a sense of proportion and allow our scientists to deal with these shortages.

Larry, Barry, Wales

Not that I believe OldStone50's assertion below that nuclear power requires a "police state"; but if it is true then only police states will have nuclear power. Obviously nuclear power is part of the solution to the world's energy supply. Energy usage is bound to increase - to suggest otherwise is to say that we will never again use as much energy as we currently use. Two billion people don't even have access to electricity at the moment. It is a certainty that the world's energy usage will increase; and it will obviously have to be policed. Just in the same way that vehicles are licensed and traffic is policed in every developed country. This is not a symptom of a police state; it is a trait of technological civilisation. Humans have always looked for ways to manipulate larger quantities of energy, and this needs to be controlled by society. The suggestion that the solution is to reduce energy consumption reminds me of Miss World wishing for "world peace": it is superficially worthy but hopelessly naïve. Energy rationing is not a long-term solution. Somebody, somewhere will always take the high-energy route and will consequently dominate economically and politically. What we need is sustainable high-energy low-carbon sources; and nuclear fits that bill.

Colin, Glasgow

Can I give you all this link http://www.stormsmith.nl/ which shows that the CO2 emissions from nuclear power stations are the same as those from one powered by fossil fuel. The following quote is part of the summary. Some novel concepts are introduced, to make the results of this study better accessible: the 'energy cliff', the 'CO2 trap', the 'coal ceiling' and the 'energy debt'. Beyond the energy cliff the nuclear system cannot generate net useful energy and will produce more carbon dioxide than a fossil-fueled power station (CO2 trap). Nuclear power may run off the energy cliff with the lifetime of new nuclear build. Beyond the coal ceiling more uranium ore has to be processed each year to feed one nuclear power plant than the annual coal tonnage of coal consumed by a coal-fired power plant to generate the same amount of electricity. The only people to gain from nuclear power are those involved in the industry, at the planet's expense.

Martin, Scotland

People seem to be forgetting that in 50 years or so there will be little oil left, though much coal that is now economical again. What is going to plug the energy gap, to power your central heating boiler, TV, car, trains, cooking, street lighting etc.? Forget about using renewables to power 15% of current needs; worry about what is going to power the half of what we currently use that is sourced from oil and gas (electricity generation, petrol, diesel, natural and bottled gas) before you even discuss other oil uses such as plastics and chemicals. As for nuclear waste, why not shoot it off into the Sun or into deep space? Don't put it on the Moon: I've seen Space 1999 and know what happens there :-)

Neil Postlethwaite, Warwickshire

Those who complain that uranium mining causes emissions of carbon (dioxide, don't forget that word) seem to forget that almost all mining and quarrying activity has similar effects. Also, to those who say that we are past 'peak uranium': the reserves of fissile material can be used far more efficiently with breeder reactors, whose waste products can themselves be used as fuel, which will last for 500,000 years or more. Nuclear power is by no means perfect, but seems to be by far the best option for a so-called 'low carbon' economy. One last thing: renewable energy is nothing of the sort! Any student of physics will be able to tell you that energy is transferred, not created or destroyed (aside from matter-antimatter annihilation and electron-positron pair production on an unimaginably small scale). Wind, tidal and solar energy are not limitless. They depend on indirect solar energy (for wind), direct solar energy (for photovoltaic cells), and the kinetic energy and gravitation of the moon (for the tides), and these are not infinite.

Will Griffith, Exeter, Devon

I agree with Dr Bleasdale. Plus take a look at France and Finland to see what can be achieved with consistent nuclear power programmes. The Finns have waste disposal facilities and are building a new nuclear power station and planning another (their sixth unit).

Peter Holt, Berne, Switzerland

A FACT about N-waste. Certain nuclides [atom species] in waste can persist for thousands of human generations; contrary to a common misconception, this is a good feature, not a drawback. A fire which takes untold ages to die down emits negligible heat here and now, and by analogy the same applies to decaying nuclides.

Leonard Ainsworth, Lytham St Annes, UK

What a one-sided story that trumpets "benefits" without ever mentioning the many drawbacks. Surely the problem lies not in generating enough power to meet the demand, but in reducing the demand to meet reality. Can the whole world use fission? I think not. It's just like John Travolta getting dressed up and re-oiled for the sequel to "Saturday Night Fever"... old, oily and wrong. Designing re-vamped reactors is stupid and selfish. Wear jumpers in the winter, insulate and generate power locally. It's so much simpler than waiting for a Buck Rogers style fusion reactor, or further destroying whatever beauty is left in the world.

Chris Harper, Yonago, Japan and Bristol

Bleasdale's failure is that of the engineer. He focuses narrowly on a micro-problem while assuming ceteris paribus, and he can't resist the attraction of the grand project, the silver bullet solution, the vision of massive concentrated power. In effect, he's a three year old boy, standing by the railroad tracks, staring wide-eyed at the train and being totally enthralled by it. It is probably possible to have many thousands of nuclear power stations all over the world cranking out energy. It may be possible to find basic fuels other than uranium, e.g., the realization of practicable fusion reactors. We might even learn to control toxic by-products safely and consistently. It might even be - with a real long stretch of the imagination - all economically efficient. But one thing that cannot be avoided with the use of nuclear power is the establishment and maintenance of strong, rigid, and absolutely centralized control. Nuclear power is a clear prescription for a police state because only through a police state can all the necessary safety measures be achieved and - much more difficult - maintained. Especially over hundreds or thousands of years. Turning off the lights is a much more rational, safe and democratic approach. And for those who have difficulty understanding metaphors, turning off the lights is a metaphor for energy use reduction.

OldStone50, Freiburg, Germany

Is there anyone else who thinks that the problem of leaving nuclear waste to future generations is insanely immoral? What kind of inheritance is that for our children, grandchildren and great-grandchildren for thousands of years? And does this set an example of responsibility? Even if there are no accidents or leaks it is quite a responsibility to be given to future generations because we are unable to live within our means.

Simon Kellett, Darmstadt, Germany

On the subject of radioactive waste, I once read that a large coal-fired power station such as Drax emits more radioactive material to the environment than the average nuclear station. Do we have dual standards?

Doug Elliot, Ormskirk, Lancashire

I believe strongly that nuclear energy is the only realistic answer to the critical state our climate is currently in. We may well have already passed a tipping point in terms of greenhouse gases and positive feedback loops. It would please me greatly if people could stop harking back to Chernobyl, and linking nuclear energy to nuclear weapons. This blinkered view will only lead to global catastrophe. 'Renewable' or 'green' energies simply do not have the capacity to support energy needs. Take the example of biofuels: wonderful in theory, but realistically the space needed to grow such a vast amount of crops would probably do more overall planetary damage than the benefits gained from using biofuels. In addition, there would most likely be food shortages (as was claimed recently in Latin America). The best thing developed nations could probably do right now is offer to share existing nuclear technologies with developing nations. Allow developing nations to skip the step that developed nations went through and that put us in this mess in the first place.

Olly Burdekin, Playa Del Carmen, Mexico

It makes me laugh when people assume we have a choice. Renewables alone will never meet our energy requirements, unless we dramatically change our way of life. Instead of worrying about the long-term problems with nuclear fission, I think we should look at it as a means to an end. This will give the nation a chance to develop carbon free ways for people to go about their daily lives (hydrogen fuel cell cars, electric heating etc). Nuclear fusion is an amazing way to create energy: a few grams of fuel (which is found in water and the earth) can power London for ages, and its only waste is helium and neutrons. A prototype reactor is expected to open in 2017. Eventually all the fission reactors could be replaced.

Paul Holland, Loughborough

Good point, Nic Brough (below). Indeed the most efficient use of the world's remaining Uranium 235 (whose mining operations are rapidly inflating the carbon footprint of nuclear power due to lower and lower grade ores now being mined) would be to build fast breeder reactors, so called because they can produce more fuel (in the form of Plutonium) than they use (in the form of U-235). These would ensure that fission could contribute to emissions targets going forward. The problem, and I think the reason no-one is talking about it, is that Plutonium is relatively easy to refine from spent fuel/waste, thus increasing the risk of proliferation of nuclear weapons should the global nuclear power industry go down the 'fast breeder' route. In my opinion it would be crazy not to develop and build fast breeder reactors, and in so doing squander the remaining economically available Uranium. Commercial fusion is still potentially a long way away (50 yrs away and always will be, as the old joke goes). Surely security concerns can be addressed in the way the meltdown/radioactive release threat has been mitigated!

Barry Gallagher, London

The editorial is the fox guarding the chicken house (or BNFL Group looking out for your health vs the profit in building/running new plants). If the amount of nuclear waste is so SMALL compared to other waste then how come people don't store it in their backyards? Texans don't want BNFL here and we don't want the nuclear waste from the world brought here to the pristine desert and aquifers. That open desert actually controls global warming in a similar way to tree cover via desert crusts, and as a society we are getting too big to simply cart our waste over to "that open space" or some poor or disenfranchised neighbor. Britain, keep your nuclear waste in Britain -- and then tell us that nuclear waste is "small": its impact is HUGE and in terms of human-years, forever.

Heather, El Paso near Sierra Blanca, Texas

Nic Brough suggested squeezing more energy from uranium will be extremely costly and that nuclear power is at the end of its improvement cycle. This is not true. Most reactors in service today are so-called thermal-spectrum reactors that burn only a few percent of the uranium (the fissile U-235 component). More advanced fast neutron reactors can also burn the U-238 component, thus giving between 60 and 100 times the energy for the same amount of ore and waste (depending a little on what reactor you compare with). Some of these designs (like the lead-cooled fast reactor) are likely to be cheaper than current technologies, mainly because molten metals have better thermodynamic properties than pressurized water does. The problem is that they are all at the prototype stage and construction of commercial plants is not expected to be feasible before the existing plants are to be shut down. Nevertheless, claiming there is no room for improvement is simply wrong.

Jonatan Ring, Lund, Sweden

Let's dismantle this, shall we? "life-cycle costs are competitive": even if (and it's a big if) costs were comparable to renewables, why have nuclear when you can have renewables for the same cost? Comparing nuclear waste with other industrial waste is silly - if it were comparable, why do we guard even moderate nuclear waste and not all industrial waste? If it's so easy to deal with, why hasn't the existing nuclear waste been finally disposed of? As for safety, "one in a million" events do happen. If they happen in a nuclear environment the potential for disaster is far greater than with, say, an accident at a coal plant. Remember Chernobyl? The decision for nuclear is a political one, not a rational one. Perhaps the money for a Nuclear Academy should be put into a 'Sustainable Academy'; perhaps then we wouldn't need any nuclear stations?

Kevin, Coventry, Coventry

Unfortunately renewables simply will not be able to fill the gap, at least not in the short term... No matter how much nuclear is vilified, it remains the only viable, if expensive, solution to the problem. As for the issue of nuclear waste, some pretty nifty ideas have been bandied about lately, and I'm pretty convinced that, at least for the time it takes for renewables to be sufficiently developed and financially efficient, using nuclear fission as a power source is our only real option.

Jamie Males, Cambridge

There is absolutely no evidence that the developed, and now developing, nations are prepared to give up their high standards of living. We are therefore forced to generate more and more electric power. There is no perfect solution: greener sources like wind power can never supply all our needs, and so we are forced to use nuclear, which, although not zero carbon, emits a thousand times less than using fossil fuels.

Mike Pettman, Chichester

Nuclear might not be zero carbon, but when compared to other forms of energy production its carbon output is extremely small; way down there with renewables. New build nuclear produces about 8 tonnes of carbon per MWh compared with around 400 for gas and 900 - 1400 for coal (depending on how dirty the coal is). Those wind turbines don't spring up from nowhere and have about the same carbon footprint per MWh as nuclear, if not more. The only real difference is that you'd have to cover the entire coastline of the UK in wind turbines (about 1 every 2km) to meet a fraction of UK demand, and then only when the wind's blowing. As for turning off light bulbs, are you serious? The amount of electricity saved is on a par with the ridiculous "let's switch all devices off standby" campaign. Electrical devices when on full blast are only 3% of total demand, so when on standby they're using a fraction of 3%. The most effective method for reducing energy use is to insulate people's homes. 60% of energy goes on heating, so logically it's where the most improvements can be made. Go out there and buy some loft insulation made from recycled bottles, some cavity wall insulation and some double or even triple glazing, then come back and tell me to switch off some lights.

Anon, England

Nuclear power supporting a thermal hydrogen generation system is absolutely zero carbon. Not a single molecule of CO2 need be released by any stage of the mining and power generation process once a full nuclear and hydrogen economy is implemented. If Chris would like us to believe nuclear power is not carbon neutral I would like him to tell us where he plans to get the steel, silicon, copper, and other materials for his wind and solar plants without mining for them and how he plans to install the new plants without building them. Heck, I'd like him to tell us what he plans to do with hundreds of thousands of tonnes of waste silicon.

Thomas, Birmingham

"... new stations are better designed and more efficient than those being replaced". The only one nearing completion in 2011 (Olkiluoto Finland), already two years late, is a prototype. So whether it will be more efficient will not be known until around 2012, 2013 or ... if it is delayed further.

John Busby, Bury St Edmunds

What a surprise - an employee of a company owned by BNFL talking up nuclear power. The reality is that nuclear power is a joke: a white elephant, a black hole into which we persist in throwing billions of pounds of taxpayers' money. Will we never learn? And as Chris (below) points out, nuclear power is in no way a carbon free source of energy. And its carbon emissions are only going to rise as the good quality uranium ores are worked out and the industry turns to lower grade sources of fuel. If we're serious about tackling climate change, nuclear power is way down the list of things that we should be doing. We should spend the money on something that might do some good instead.

Eddie, Edinburgh

Yes, nuclear power is an option - but it comes with a terrible legacy of waste that remains dangerous for thousands of years! The cost of keeping this waste secure under permanent (?) safe conditions is enormous and ongoing. Closing down older plants is fraught with problems, let alone the huge costs. The government should have encouraged industry to invest in research for more efficient ways of producing energy for homes, cars and air transport years ago - it's not like they didn't know this was coming! The promising technology is there; it just needs a kick start with financial inducement and legislative targets to be met by certain dates. The upshot is the oil industry and its dependents are hampering progress in this area for their own ends. Some of the profits have to be channelled into research now; the world cannot wait. Green technology will be the end result: power first, then refine it! Ignoring this research will inevitably result in wars and famine - that none of us want or deserve! BCJ

BARRY JOHNSNON, Minster, Sheerness

I agree that nuclear energy needs to be revisited. It has numerous advantages and even the worst case disaster of Chernobyl didn't prove to be anything like as damaging to the environment as first feared, as a Horizon programme a few years ago showed.

Les Howarth, Saffron Walden, UK

> a range of independent studies now show that full nuclear life-cycle costs are competitive with other sources

But only if the costs of waste disposal and security are not taken into account. Also neglected are other facts like:

* Fission power is at the end of its technological improvement cycle - it's going to cost a fortune to squeeze another 0.1% of energy out of the fuel, whereas renewables are mostly at the beginning
* it takes 10+ years to get a plant running, but we need the energy now
* we're already close to "peak uranium", if not past it, and demand is rising - fuel is going to get more expensive and there's no way back
* whilst Europe has a very good record on reactor safety, we have an appalling one on disposal of waste and leakage.

Our current nuclear option is the most expensive waste of time we can possibly chase, and we, the taxpayer and consumer, are going to get burnt again. We need to forget fission reactors and fund research, building and improvements on renewable energy sources - wind, wave, tidal, solar and fusion power. Our only real "nuclear" option is fast-breeder reactors, which no-one seems to mention any more.

Nic Brough, London

Nuclear power is not actually "tried-and-tested carbon free", as stated in the report. A great deal of energy is spent - and carbon used - in the mining and enrichment processes that uranium must go through before it can be considered fuel. It is also hard if not impossible to estimate the costs of decommissioning a nuclear power plant, with further carbon costs. The report also states that nuclear waste is "very small by industrial standards". What is necessary to balance this statement is the fact that there is no current technical solution for the very long-term management of high-level waste, which must be considered. Even deep geological storage of waste does not stop leaching from barrels, change to water tables, and damage from earthquakes. Such sites would therefore need to be managed for thousands of years to ensure they function as intended. Uranium is not a limitless source either. Current estimates put uranium supplies at 200 years left, at today's uranium consumption. The cost of nuclear power must be considered across the entire nuclear power generation "cycle". The decision to use nuclear power is an economic one, with short-term costs politically hidden away, and the long-term costs the burden of another generation. A useful starting point for anyone interested in the topic is a report from the UK's Sustainable Development Commission.

Chris, Glasgow

I see Dr Bleasdale neglects to mention that whilst the new stations will be more efficient than the previous ones, the high burn rates of the uranium that give greater efficiency will cause a range of knock-on problems. They make the cladding for the fuel rods more brittle, giving clear safety issues that would have to be resolved before any consideration of operation. They also make the spent elements of the high burn-up fuel far more radioactive than the current waste so, whilst there would be less of it, it would need to be stored much further apart than at present (due to the heat build-up), meaning it would actually require more storage space.

Jon, Rainham, Kent

The example of France provides the proof that there is a role for nuclear energy. There is a desperate need, particularly in UK, to take the technology forward - an NNL should achieve that. It would seem reasonable to state that the cost of all the damage done by "conventional" fuels so far and into the future exceeds any done to date by nuclear. The underlying fear is always the potential for mis-use of the knowledge.

Timothy Havard, Fife Scotland

Problem is it will take so long to build these reactors we will have run out of capacity long before they are completed. We need to start building them now and to balance this with large tidal (Severn Barrage and Thames Barrage) and wind. Add to this some biomass, and gas and coal near the places where there are supplies.

Ben Shepherd, Farnham, Surrey

Please, please stop calling nuclear fission "zero carbon" - it is NOT! The new infrastructure and fuel production are both massive sources of emissions during construction, operation and decommissioning phases. As for keeping the lights on - let's turn some off for goodness sake, and there'll be far less of an energy gap which could then be covered by renewables!

Chris, Blewbury, Oxon
