Very, very cool news today: for the first time in history, astronomers have unambiguously observed the exact moment when a star explodes.

Whoa.

The Quick Version

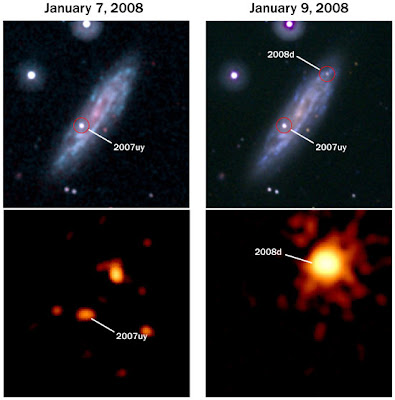

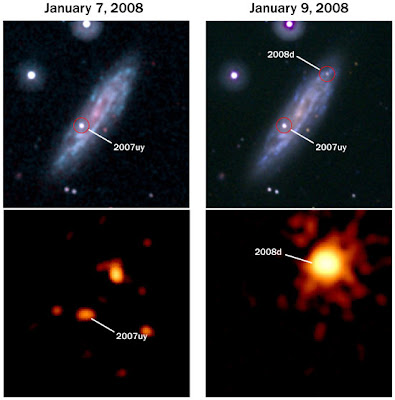

NGC 2770 is a relatively nearby galaxy, about 84 million light years away. On January 9, 2008, a massive star in it exploded, and instead of finding out days or weeks later, astronomers caught it in the act, right at the moment, in flagrante delicto. The image above, from the Gemini observatory, shows the galaxy and its new supernova.

We’ve seen lots of stars explode; thousands in fact. But because of the mechanics of how a star actually explodes, by the time we notice the light getting brighter, the explosion may be hours or even days old. This time, because it was caught so early, astronomers will learn a whole passel of new knowledge about supernovae.

That’s the brief summary… but this event has a rich back story. Pardon me the lengthy description, but this is very cool stuff, and I think you’ll enjoy having the details.

The Death of a Star

I’ve explained before how massive stars explode. After a few million years of generating energy by fusing light elements into heavier ones (hydrogen to helium, helium to carbon, and so on), the core runs out of fuel. Iron builds up in the very center of the star, and no star in the Universe has what it takes to fuse iron. It’s like ash piling up in a fireplace. At some point, so much iron builds up that it cannot support its own weight, and the ball of iron collapses.

In a millisecond, more than the Sun’s mass in iron collapses from an object the size of the Earth to a ball only about 20 kilometers across. Weirdly, it happens so quickly that the surrounding layers don’t have time to react, to fall down (think Wile E. Coyote running over a cliff’s edge). While they hesitate and just start to fall, all hell breaks loose below them.

Figure: artist’s drawing of a supernova.

The collapse of the core generates a vast explosion, a shock wave of incomprehensible power that moves out from the surface of the collapsed core into the layers of gas still surrounding it. Like a tsunami of energy, this shock wave works its way up to the surface of the star. The energy is so huge that the material it slams into gets heated to millions of degrees. When the shock wave breaks out through the surface, it bellows its freedom to the Universe with a flash of X-rays, a brief but incredibly brilliant release of high-energy light.

After that flash (what’s called the shock breakout), the explosion truly begins. The outer layers of the star — gas that can have many times the mass of the Sun, octillions of tons — blast outwards. The star tears itself apart, and becomes a supernova.

So — and this is important — the X-ray flash is emitted immediately, but the bright visible light follows it later.

Millions of light years away, astronomers on Earth patrol the sky with telescopes. We use ones that are sensitive to visible light, the kind we see with our eyes. They can see large parts of the sky easily, and that’s good since we never know where the next supernova will be. But that also means we don’t see the shock breakout; we notice the star exploding usually days after the actual event occurs.

We really want to see the supernova as early as possible; the physics, the mechanics of the explosion depend critically on what happens early on, so the younger we see the new supernova, the better we can tune our models and understand precisely how and why stars explode. X-ray telescopes could detect the first moments of the event, but they typically have too small a field of view to catch one as it occurs. The odds are simply way too small, and no one has ever actually witnessed the predicted X-ray flash from the shock breakout.

Until now.

Now, finally, we have seen a supernova go off right as it happened. And, ironically, it was an accident, an amazing coincidence, that allowed us to see it.

The Astronomer, the Coincidence, the Mobilization

NASA’s Swift satellite is sensitive to X-rays; it’s designed to detect the flash of light from gamma-ray bursts, which are a particular flavor of supernova. But Swift can also be used to observe older events. Alicia Soderberg, an astronomer at Princeton, had gotten time on Swift to observe SN2007uy, a supernova that had exploded the month before. This was a routine observation, and on January 9, 2008, she was actually traveling, ironically to give a talk about supernovae!

When she got back from her talk, she logged onto the Swift archive to look at the data as it came in, and got a huge surprise. There was a second, new source of X-rays in the field of view… and it was incredibly bright. She quickly realized what she was seeing: a new supernova caught in the act, the X-ray flash of the shock breakout detected for the first time. She realized Swift had caught the birth of supernova 2008D as it was happening.

The image above shows the pre- and post-discovery Swift images of the event. The upper images are in ultraviolet light, and show the galaxy NGC 2770. The bottom images show the same field, but in X-rays, where the galaxy itself is dim, but stars and star-forming gas clouds are bright. The images in the left column were taken on January 7, 2008 and the right column two days later, during the shock breakout. You can see how SN2008D is rather unremarkable in ultraviolet, but in X-rays is tremendously bright, washing out everything else in the galaxy.

Mind you, the X-ray flash from the supernova only lasted about five minutes. If Swift had not been looking right at that spot at just that moment, this once-in-a-lifetime opportunity would have been lost.

That’s how cool this is.

I can only imagine what Soderberg was thinking when she saw that image on the lower right. Wow. But she acted quickly. She and her colleagues immediately mobilized a team of astronomers using telescopes across the world (and above it) to observe the newly born supernova. Using the Gemini telescope (the same one that made the beautiful picture at the top of this post), they quickly got spectra of the event, and those spectra confirmed it: she had bagged an exploding star. The energies of a supernova are so great that the outer layers explode outwards at a fraction of the speed of light, and velocities measured from SN2008D indicated expansion rates of more than 10,000 kilometers per second. That’s fast enough to cross the entire Earth in just over one second, and faster than the expansion in a typical supernova.
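If you want to check that speed for yourself, here is a quick back-of-the-envelope sketch in Python. The 10,000 kilometers per second figure comes from the measurements described above; the Earth's diameter (about 12,742 km) and the speed of light are standard values I'm assuming here, and the function names are just made up for illustration.

```python
# Rough sanity check of the ejecta speed quoted in the post.
EJECTA_SPEED_KM_S = 10_000.0    # lower bound quoted for SN2008D
EARTH_DIAMETER_KM = 12_742.0    # assumed mean diameter of Earth
LIGHT_SPEED_KM_S = 299_792.458  # speed of light in vacuum


def crossing_time_s(distance_km, speed_km_s):
    """Time in seconds to cover distance_km at a constant speed_km_s."""
    return distance_km / speed_km_s


def fraction_of_light_speed(speed_km_s):
    """Express a speed as a fraction of the speed of light."""
    return speed_km_s / LIGHT_SPEED_KM_S


if __name__ == "__main__":
    t = crossing_time_s(EARTH_DIAMETER_KM, EJECTA_SPEED_KM_S)
    f = fraction_of_light_speed(EJECTA_SPEED_KM_S)
    print(f"Time to cross Earth: {t:.2f} s")
    print(f"Fraction of light speed: {f:.1%}")
```

With those assumed values, the gas would cross the Earth in roughly 1.3 seconds, and it is moving at a few percent of the speed of light.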

The Aftermath

Because so many people observed this supernova from so early on, a vast wealth of knowledge was collected. The progenitor star probably started out life with about 30 times the mass of the Sun. Over a few million years, it shed quite a bit of its mass through a dense, super-solar wind, blowing off most (but probably not all) of its outer layers. When the core collapsed, the shock wave tore through what was left of the star’s envelope. Because there wasn’t as much material as usual surrounding the core, the energy of the blast could accelerate the gas outward at an unusually high speed. It’s also been determined that the explosion wasn’t symmetric: it wasn’t a perfect sphere, with the gas expanding in every direction equally. Instead it was off-center, with gas on one side of the explosion moving outward faster than on the other. This has been seen before, but never so early on.

All of this adds up to an incredible boon for astronomers. The observations collected are yielding a huge amount of information not just on this particular event, but on the basic parameters of supernova explosions themselves. Because of this happy coincidence — a new supernova occurring near an older one, and just as a powerful and sensitive X-ray observatory was pointed in the right direction, and with attentive scientists keeping an eye on their data — astronomers will take a huge step forward in understanding these tremendous explosions.

The Importance

You should understand something else here, too. As the blast wave moves through the gas of the star, the elements in that gas undergo an explosive fusion, creating new, heavier elements. Elements like iron, calcium, and gold. The hemoglobin in your blood, the bones in your body, and the wedding ring on your finger — all of these can trace their lineage back to a star that exploded like SN2008D. Every heavy element in the Universe was created in such an event, in the heart and fury of a supernova.

We owe our very existence to stars that explode.

That’s why work like this is important. Through science like this we can determine our own origins, from the hydrogen that formed a millisecond after the Big Bang, through elements built up in normal stars like the Sun, through heavy elements created in supernovae… to us.

That’s where science leads. We look out to the farthest reaches of the Universe, and we wind up seeing ourselves.

What if you could convince people to trust you and take risks for you with just a few drops of liquid surreptitiously placed in their water? There would be no drunkenness, no roofie-esque glazed eyes: just pure, human trust created via chemicals. The person wouldn't even know they'd been dosed. A study coming out tomorrow in the journal Neuron explains how this scenario is possible today, with just a small dose of the brain chemical oxytocin.

The Sunshine State might have a lot of catching up to do when it comes to solar energy installations, but it’s now on a fast track toward big improvements.