Happy birthday, Universe!

Kinda. It’s not really the Universe’s birthday, but now we do know to high accuracy just how old it is.

How?

NASA’s WMAP is the Wilkinson Microwave Anisotropy Probe (which is a mouthful, and why we just call it WMAP). It was designed to map the Universe with exquisite precision, detecting microwaves coming from the most distant source there is: the cooling fireball of the Big Bang itself.

New results just released from WMAP have nailed down lots of cool stuff — literally — about the Universe.

I am about to explain the early Universe to you. I’ll be brief, but if you want to skip to the results, then go ahead.

Here’s the quick version: the Big Bang was hot. The Universe expanded (actually, it’s space itself that expands, not the objects in it), and like any expanding gas it cooled. After about a microsecond, it had cooled enough for protons and neutrons to form. Three minutes later (yes, just three minutes), it had cooled enough for protons and neutrons to stick together into nuclei. Hydrogen, helium, and just a dash of lithium were created, and these would be the only elements for some time (hundreds of millions of years, in fact). The Universe was a thick soup of matter and energy.

It kept expanding and cooling. At this point, it was opaque to light. A photon couldn’t travel an inch without smacking into an electron and then getting sent off in some other random direction. However, after a few hundred thousand years, an amazing thing happened: neutral hydrogen could form. Before this point, the Universe was still too hot; as soon as an electron bonded with a proton, some ultraviolet photon would come along and whack it off. But at that golden moment the cosmos had cooled off enough that a lasting atomic relationship was in the offing. Neutral hydrogen was born. At that moment — astronomers call it recombination, which is a misnomer, since it was the first time electrons and protons could combine — the Universe became transparent; without all those pesky electrons floating around, photons found themselves free to travel long distances.

It’s those photons WMAP sees. Over 13.7 billion years, the expansion of the Universe has cooled that light, stretching its wavelength from the visible and near-infrared all the way to microwaves. Another way to think about it is that the temperature of the radiation went from thousands of kelvins down to just a few: less than 3, in fact. That’s -270 Celsius, or -454 Fahrenheit.
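To put numbers on that cooling, here is a minimal Python sketch. It assumes the standard result that radiation temperature scales with redshift as T_then = T_now × (1 + z), takes an assumed redshift of recombination of about z = 1090 (a value not given in this post), and uses Wien’s displacement law to find where the glow peaked. The function names are illustrative, not from any particular library.

```python
# Sketch: how cosmic expansion cools the CMB and stretches its light.
# Assumptions (not from the post): redshift of recombination z ~ 1090,
# and the blackbody peak wavelength follows Wien's displacement law.

WIEN_B = 2.897771955e-3  # Wien's displacement constant, metre-kelvin


def temperature_at_redshift(t_now_k: float, z: float) -> float:
    """Radiation temperature scales as (1 + z): T_then = T_now * (1 + z)."""
    return t_now_k * (1.0 + z)


def wien_peak_wavelength_m(t_k: float) -> float:
    """Wavelength (metres) at which a blackbody of temperature t_k peaks."""
    return WIEN_B / t_k


def kelvin_to_celsius(t_k: float) -> float:
    return t_k - 273.15


def kelvin_to_fahrenheit(t_k: float) -> float:
    return t_k * 9.0 / 5.0 - 459.67


T_NOW = 2.725  # K, average CMB temperature quoted in the post
Z_REC = 1090.0  # assumed redshift of recombination (hypothetical input)

if __name__ == "__main__":
    t_then = temperature_at_redshift(T_NOW, Z_REC)
    print(f"Temperature at recombination: {t_then:.0f} K")
    print(f"Peak wavelength then: {wien_peak_wavelength_m(t_then) * 1e6:.2f} micrometres")
    print(f"Peak wavelength now:  {wien_peak_wavelength_m(T_NOW) * 1e3:.2f} millimetres")
    print(f"Today: {kelvin_to_celsius(T_NOW):.1f} C, {kelvin_to_fahrenheit(T_NOW):.1f} F")
```

Run as written, it puts the temperature at recombination near 3,000 K with the glow peaking around 1 micrometre (near-infrared), and today’s peak around 1 millimetre, in the microwave band. It also reproduces the -270 C and -454 F figures.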

Brrrr.

That light emitted just after recombination tells us a vast amount about the Universe at that time. By carefully mapping the tiny variations in the temperature of the light and the directions from which it came, we can determine the density and temperature of the matter at that time. Incredibly, we can also tell how much dark energy there is, and even the geometry of the Universe: whether it is flat, open, or closed.

All this, from the dying glow of the Big Bang itself.

WMAP Results

A lot of this information was determined a while back, just a couple of years after WMAP launched. But now they have released the Five Year Data, a comprehensive analysis of what all that data means. Here’s a quick rundown:

1) The age of the Universe is 13.73 billion years, plus or minus 120 million years. Some people might say it doesn’t look a day over 6000 years. They’re wrong.

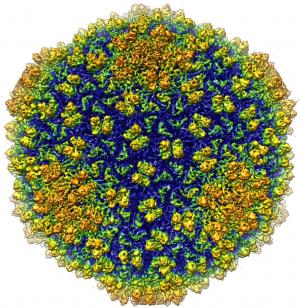

2) The image above shows the temperature differences across the sky. Red is hotter, blue is cooler. However, the differences are incredibly small: the entire range from coldest to hottest is only about 0.0004 degrees Celsius (0.0002 either side of the average). The average temperature is 2.725 kelvin, so you’re seeing temperatures from 2.7248 to 2.7252 kelvin.

3) The age of the Universe when recombination occurred was 375,938 years, +/- about 3100 years. Wow.

4) The Universe is flat.

5) The energy budget of the Universe is the total amount of energy and matter in the whole cosmos added up. Together with some other observations, WMAP has been able to determine just how much of that budget is occupied by dark energy, dark matter, and normal matter. What they got was: the Universe is 72.1% dark energy, 23.3% dark matter, and 4.62% normal matter. You read that right: everything you can see, taste, hear, touch, just sense in any way… is less than 5% of the whole Universe.

We occupy a razor thin slice of reality.
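Items 2 and 5 in the list both rest on simple arithmetic, sketched below in Python. The temperature endpoints and budget percentages are the figures quoted in the list; the helper names are hypothetical. The check that the three budget shares add up to roughly 100% reflects the standard picture in which a flat Universe has a total density equal to the critical density.

```python
# Sketch: arithmetic behind items 2 and 5 of the WMAP five-year rundown.
# Helper names are hypothetical; the numbers are the ones quoted in the post.

T_MEAN = 2.725                  # K, average CMB temperature
T_COLD, T_HOT = 2.7248, 2.7252  # K, coldest and hottest values on the map

# Percent shares of the cosmic energy budget
WMAP5_BUDGET = {"dark energy": 72.1, "dark matter": 23.3, "normal matter": 4.62}


def temperature_span(t_cold: float, t_hot: float) -> float:
    """Full cold-to-hot range of the map, in kelvin."""
    return t_hot - t_cold


def fractional_contrast(t_cold: float, t_hot: float, t_mean: float) -> float:
    """Largest deviation from the mean (half the span) as a fraction of the mean."""
    return temperature_span(t_cold, t_hot) / 2.0 / t_mean


def budget_total(budget: dict[str, float]) -> float:
    """Sum of all budget shares, in percent; about 100 for a flat Universe."""
    return sum(budget.values())


def dark_fraction_of_matter(budget: dict[str, float]) -> float:
    """Fraction of all matter (dark + normal) that is dark matter."""
    matter = budget["dark matter"] + budget["normal matter"]
    return budget["dark matter"] / matter


if __name__ == "__main__":
    print(f"Temperature span: {temperature_span(T_COLD, T_HOT):.4f} K")
    print(f"Fractional contrast: {fractional_contrast(T_COLD, T_HOT, T_MEAN):.1e}")
    print(f"Budget total: {budget_total(WMAP5_BUDGET):.2f}%")
    print(f"Dark matter share of all matter: {dark_fraction_of_matter(WMAP5_BUDGET):.1%}")
```

The fractional contrast comes out below one part in ten thousand, the budget sums to within a rounding error of 100%, and dark matter accounts for roughly five-sixths of all the matter.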

There are other important things that have come from the WMAP data, and if you’re interested, you can read all about them on the WMAP site and in the professional journal papers.

But if you only want to peruse the results I’ve highlighted here, that’s fine too. Just remember this, and remember it well: you are living in a unique time. For the first time in all of human history, we can look up at the sky, and when it looks back down on us it reveals its secrets. We are the very first humans to be able to do this… and we have the entire future of the Universe ahead of us.


We all know that fast cars are fun and fuel-sipping cars are environmentally responsible, but is there a middle ground?
